- Artificial Neural Network Model Full Deep Learning
- Artificial Neural Network Model Install And Use

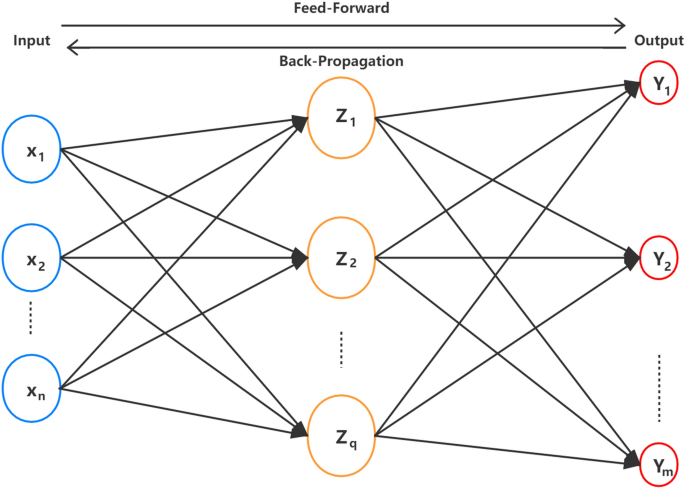

There are many normalization methods, such as Batch Normalization and Group Normalization (which is also available for Keras). Batch Normalization (BatchNorm) is a widely adopted technique that enables faster and more stable training of deep neural networks (DNNs). An artificial neural network (ANN) is an efficient computing system whose central theme is borrowed from the analogy of biological neural networks. An ANN consists of a large collection of interconnected units. ANNs are also called artificial neural systems, parallel distributed processing systems, or connectionist systems.
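The idea of an ANN as a collection of units can be made concrete with a single fully connected layer. This is a minimal NumPy sketch, not code from the original post; the layer sizes, the ReLU activation, and the helper name `dense_forward` are illustrative assumptions.

```python
import numpy as np

def dense_forward(x, W, b):
    """Forward pass of one fully connected layer (hypothetical helper).

    Each unit computes a weighted sum of all inputs plus a bias,
    then applies a ReLU nonlinearity.
    """
    z = x @ W + b            # shape: (batch, units)
    return np.maximum(z, 0.0)

# Toy sizes chosen for illustration: 4 examples, 3 inputs, 2 units.
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 3))
W = rng.normal(size=(3, 2))
b = np.zeros(2)

out = dense_forward(x, W, b)
print(out.shape)  # (4, 2)
```

Stacking several such layers, each feeding the next, gives a deep network; normalization layers are typically inserted between them.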

I got exceptions saying that Keras expects a "Keras tensor". The imports used were `from keras import initializers`, `from keras import regularizers`, `from keras import constraints`, `from keras import backend as K`, and `from keras.`… Instance Normalization is a special case of Group Normalization (one channel per group), since it normalizes all features of one channel.

Batch Normalization is a technique for training very deep neural networks that standardizes the inputs to a layer for each mini-batch. Related operations for conditioning data include centering, normalization, and whitening.
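The per-mini-batch standardization can be sketched in a few lines of NumPy. This is a minimal training-mode sketch, not the Keras layer itself; `eps=1e-3` mirrors the Keras default `epsilon`, and `gamma`/`beta` stand for the learnable scale and shift. The helper name `batch_norm_train` is hypothetical.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-3):
    """Training-mode batch normalization for a (batch, features) array."""
    # Per-feature statistics over the mini-batch axis.
    mu = x.mean(axis=0)
    var = x.var(axis=0)
    # Standardize, then apply the learnable scale (gamma) and shift (beta).
    x_hat = (x - mu) / np.sqrt(var + eps)
    return gamma * x_hat + beta

rng = np.random.default_rng(0)
x = rng.normal(loc=5.0, scale=3.0, size=(64, 4))   # one mini-batch
y = batch_norm_train(x, gamma=np.ones(4), beta=np.zeros(4))

print(y.mean(axis=0))  # approximately 0 for every feature
print(y.std(axis=0))   # approximately 1 for every feature
```

With `gamma=1` and `beta=0` the output is simply standardized; during training the network can learn other values if a different scale or offset works better.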

Here you can see we are defining two inputs to our Keras neural network, such as `inputA` (32-dim). I tried adding dimension parameters to the layer declarations, and even tried adding the `mode` and `axis` parameters to `BatchNormalization`, but it does not seem to work.

Standardizing a feature computes z = (x - μ) / σ, where μ is the mean value of the feature and σ is the standard deviation of the feature. The test input uses `num_steps = 3`, `num_features = 2`, and `x_shaped = np.`…
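The standard score z = (x - μ) / σ can be computed per feature for a small `(num_steps, num_features)` array. The values below are hypothetical, since the original `x_shaped` construction is cut off; population standard deviation (NumPy's default) is assumed.

```python
import numpy as np

num_steps, num_features = 3, 2
# Hypothetical values; the original x_shaped array is not shown in full.
x = np.array([[1.0, 10.0],
              [2.0, 20.0],
              [3.0, 30.0]])
assert x.shape == (num_steps, num_features)

mu = x.mean(axis=0)       # per-feature mean μ
sigma = x.std(axis=0)     # per-feature standard deviation σ (population)
z = (x - mu) / sigma      # standardized values

print(z)  # both columns become about [-1.22, 0, 1.22]
```

Both features end up on the same scale even though the second column is ten times larger, which is the point of standardizing before training.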

Artificial Neural Network Model Install And Use

Batch normalization is still the most common choice, despite its dependence on batch size. For a recurrent cell, the state size can be a single integer (a single state), in which case it is the size of the recurrent state. Based on the GN implementation by shaohua0116, an exponential moving average of the global mean and variance is added, as in batch normalization, so the mean/variance it uses is different. When the image resolution is high and a large batch size cannot be used because of memory constraints, group normalization is a very effective technique. The goal here is to install and use Python and Keras to build deep learning models.
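The exponential moving average of the global mean and variance can be sketched as follows. Keras documents this update for `BatchNormalization` as `moving_mean = moving_mean * momentum + mean(batch) * (1 - momentum)` (and likewise for the variance), with a default momentum of 0.99; the class `RunningStats` below is a hypothetical NumPy illustration of that rule, not the shaohua0116 code.

```python
import numpy as np

class RunningStats:
    """Hypothetical helper: per-feature moving mean/variance as kept by a
    batch-normalization layer for use at inference time."""

    def __init__(self, num_features, momentum=0.99, eps=1e-3):
        self.momentum = momentum
        self.eps = eps
        self.moving_mean = np.zeros(num_features)   # starts at 0
        self.moving_var = np.ones(num_features)     # starts at 1

    def update(self, batch):
        # moving = moving * momentum + batch_stat * (1 - momentum)
        m = self.momentum
        self.moving_mean = self.moving_mean * m + batch.mean(axis=0) * (1 - m)
        self.moving_var = self.moving_var * m + batch.var(axis=0) * (1 - m)

    def normalize_inference(self, x):
        # Inference uses the stored averages, not the current batch.
        return (x - self.moving_mean) / np.sqrt(self.moving_var + self.eps)

rng = np.random.default_rng(0)
stats = RunningStats(num_features=2)
for _ in range(2000):
    batch = rng.normal(loc=[3.0, -1.0], scale=[2.0, 0.5], size=(32, 2))
    stats.update(batch)

print(stats.moving_mean)  # close to [3, -1]
print(stats.moving_var)   # close to [4, 0.25]
```

A momentum close to 1 makes the averages smooth but slow to adapt, which is why many updates are needed before they settle near the true statistics.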

Awesome-CV is a beautiful CV template in which you only need to change the content. Keras reduces developer cognitive load. Normalization is required to condition the data. The `call` method of an RNN cell can also take the optional argument `constants` (see the "Note on passing external constants" section of the Keras `RNN` layer documentation). Batch normalization applies a transformation that keeps the mean output close to 0 and the output standard deviation close to 1.

Artificial Neural Network Model Full Deep Learning

You can take a slice of 80 out of 256 values and ignore the other 176 values. This paper presents Group Normalization (GN) as a simple alternative to BN. I came across a strange thing when using the Batch Normalization layer of TensorFlow 2: `… BatchNormalization())`, `model.`… In particular, we will go through the full deep learning pipeline, starting from exploring and processing the data. The GroupNormalization_keras project covers usage and experiments with group normalization (an experiment notebook and a blog post): Experiment 1 compares BatchNorm, GroupNorm, and InstanceNorm, and Experiment 2 makes further comparisons.

However, both the mean and the standard deviation are sensitive to outliers, and this technique does not guarantee a common numerical range for the normalized scores. Commonly used types of normalization include batch normalization, layer normalization, instance normalization, group normalization, weight normalization, and cosine normalization. As a result, a deep learning practitioner will favor training a… If you are not yet familiar with BN, this article explains the basic intuition behind it, including its origin and how it can be implemented within a neural network using TensorFlow and Keras. Despite its pervasiveness, the exact reasons for BatchNorm's effectiveness are still poorly understood. During the training forward pass, the Batch Normalization statistics are updated.
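The outlier sensitivity of z-score normalization is easy to demonstrate. This NumPy sketch uses two made-up arrays that share their first four values; the helper name `z_score` is hypothetical.

```python
import numpy as np

def z_score(x):
    """Standard score: subtract the mean, divide by the standard deviation."""
    return (x - x.mean()) / x.std()

clean = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
with_outlier = np.array([1.0, 2.0, 3.0, 4.0, 100.0])

z_clean = z_score(clean)
z_out = z_score(with_outlier)

# Spread of the first four values, which are the same in both arrays.
print(z_clean[:4].max() - z_clean[:4].min())   # about 2.12
print(z_out[:4].max() - z_out[:4].min())       # about 0.077
# The overall range of the scores also differs between the two arrays.
print(z_clean.min(), z_clean.max())
print(z_out.min(), z_out.max())
```

A single large value inflates σ, squeezing the ordinary values into a narrow band, and the resulting scores no longer span the same range as in the clean case.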

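Group Norm sits between Instance Norm and Layer Norm: with a single group it reduces to Layer Norm, and with one channel per group it reduces to Instance Norm. A value of 0.8 is suggested in a paper (retain 80%); in Keras, where the argument is the fraction of units to drop, this is in fact a dropout rate of 0.2. However, you may opt for a different normalization strategy. Group normalization is useful for fine-tuning large models on smaller batch sizes than in a research setting (where the batch size is very large because of multiple GPUs).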
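The claim that Group Norm sits between Instance Norm and Layer Norm can be checked numerically. This is a NumPy sketch on channels-last (NHWC) input without learnable γ/β; the function names and `eps=1e-5` are assumptions, not the API of any particular library.

```python
import numpy as np

def group_norm(x, groups, eps=1e-5):
    """Normalize an NHWC array over (H, W, channels in group), per sample."""
    n, h, w, c = x.shape
    assert c % groups == 0, "channels must be divisible by groups"
    xg = x.reshape(n, h, w, groups, c // groups)
    mu = xg.mean(axis=(1, 2, 4), keepdims=True)
    var = xg.var(axis=(1, 2, 4), keepdims=True)
    return ((xg - mu) / np.sqrt(var + eps)).reshape(n, h, w, c)

def layer_norm(x, eps=1e-5):
    # One set of statistics per sample over all of H, W, C.
    mu = x.mean(axis=(1, 2, 3), keepdims=True)
    var = x.var(axis=(1, 2, 3), keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def instance_norm(x, eps=1e-5):
    # One set of statistics per sample and per channel over H, W.
    mu = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 4, 4, 8))   # (batch, height, width, channels)

print(np.allclose(group_norm(x, groups=1), layer_norm(x)))     # True
print(np.allclose(group_norm(x, groups=8), instance_norm(x)))  # True
```

None of these statistics involve the batch axis, which is why Group Norm behaves the same regardless of batch size.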

NUS Social Media Text Normalization and Translation Corpus. The overall batch size should be an integer multiple of `virtual_batch_size`. Step 5: load the weights into an encoder model and predict. Group Normalization (GN) divides the channels of your inputs into smaller subgroups and normalizes these values based on their mean and variance, computed separately for each sample and each group.
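The `virtual_batch_size` requirement comes from "ghost" batch normalization, an option of TensorFlow's Keras `BatchNormalization` layer, in which the batch is split into virtual sub-batches that are normalized separately. The NumPy sketch below illustrates only that idea (moving statistics are omitted), and the helper name `ghost_batch_norm` is hypothetical.

```python
import numpy as np

def ghost_batch_norm(x, virtual_batch_size, gamma, beta, eps=1e-3):
    """Normalize each virtual sub-batch of a (batch, features) array with its
    own mean and variance; gamma and beta are shared by all sub-batches."""
    n = x.shape[0]
    if n % virtual_batch_size != 0:
        raise ValueError("batch size must be an integer multiple of virtual_batch_size")
    # Shape: (num_virtual_batches, virtual_batch_size, features)
    xv = x.reshape(n // virtual_batch_size, virtual_batch_size, -1)
    mu = xv.mean(axis=1, keepdims=True)
    var = xv.var(axis=1, keepdims=True)
    x_hat = (xv - mu) / np.sqrt(var + eps)
    return (gamma * x_hat + beta).reshape(x.shape)

rng = np.random.default_rng(0)
x = rng.normal(size=(64, 3))
y = ghost_batch_norm(x, virtual_batch_size=16, gamma=np.ones(3), beta=np.zeros(3))

# Every block of 16 rows is standardized on its own statistics.
print(np.round(y[:16].mean(axis=0), 6))
print(np.round(y[16:32].mean(axis=0), 6))
```

The reshape only works when the batch divides evenly, which is exactly why the overall batch size must be a multiple of `virtual_batch_size`.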

Each of our layers extends PyTorch's neural network `Module` class. We create our own custom augmentation functions, which modify the images as well as the bounding boxes. The visualization is a bit messy, but the large PyTorch model is the box that's an… This is a Keras implementation of Group Normalization, the method proposed by Yuxin Wu and Kaiming He. Please provide details about the parameters used in the group normalization layers. γ and β are learnable scale and shift parameters applied after normalization.
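A custom augmentation that modifies both the image and its bounding boxes can be as simple as a horizontal flip. This NumPy sketch assumes an `(H, W, C)` image and boxes stored as `[x_min, y_min, x_max, y_max]` pixel coordinates; the helper name `hflip_with_boxes` and that box format are assumptions, not taken from the original code.

```python
import numpy as np

def hflip_with_boxes(image, boxes):
    """Horizontally flip an (H, W, C) image together with its bounding boxes.

    Assumed box format: (N, 4) rows of [x_min, y_min, x_max, y_max] in
    pixel coordinates measured from the image's left/top edges.
    """
    width = image.shape[1]
    flipped = image[:, ::-1, :]
    new_boxes = boxes.astype(float)   # astype returns a copy
    # Mirroring maps x to width - x, so the old x_max becomes the new x_min.
    new_boxes[:, 0] = width - boxes[:, 2]
    new_boxes[:, 2] = width - boxes[:, 0]
    return flipped, new_boxes

image = np.zeros((10, 20, 3))
image[0, 0, 0] = 1.0                      # mark the top-left pixel
boxes = np.array([[2.0, 3.0, 5.0, 8.0]])

flipped, new_boxes = hflip_with_boxes(image, boxes)
print(new_boxes)          # new box: x_min=15, x_max=18, y unchanged
print(flipped[0, 19, 0])  # the marked pixel is now at the top-right
```

Swapping the roles of `x_min` and `x_max` keeps every box valid (`x_min < x_max`) after the mirror.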
